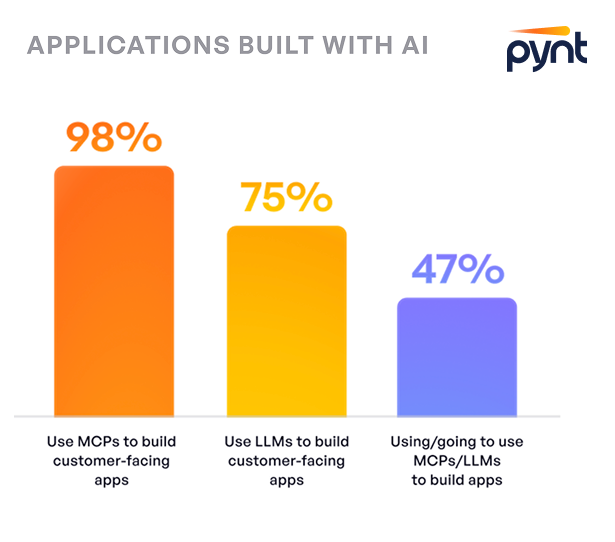

Large Language Models (LLMs) like GPT-4 have redefined human-computer interactions, providing unprecedented capabilities in generating human-like text and conducting complex dialogues. This technology has catalyzed the development of many platforms, which interprets LLM outputs to execute commands, further expanding the practical applications of LLMs in various sectors.

Advancements and Capabilities of LLMs

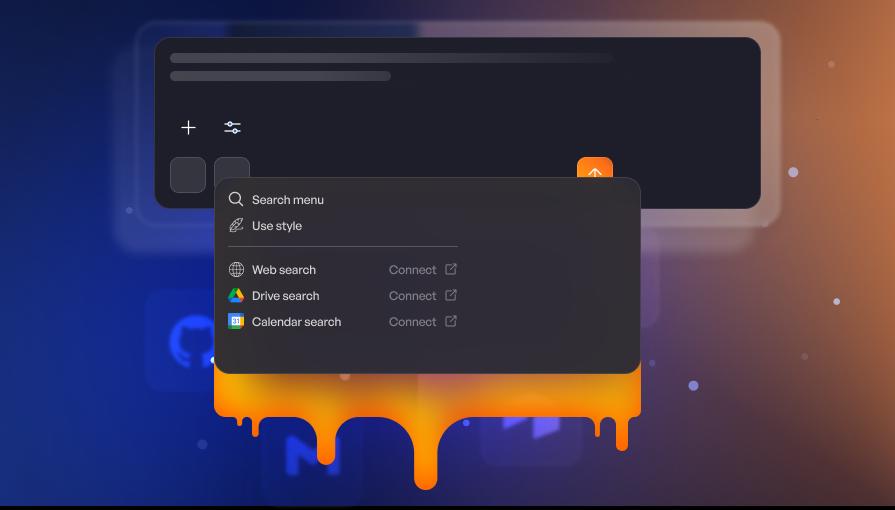

LLMs have progressed beyond mere text generation; they now facilitate intricate tasks that blend Generative AI's analytical prowess with nuanced human-like interaction. A typical platform exemplifies this by transforming LLM outputs into actionable commands, thereby extending the utility of LLMs from conversation agents to proactive task handlers.

This evolution allows businesses to automate more sophisticated services that traditionally required human oversight, such as customer support, personalized content creation, and complex problem-solving scenarios (e.g. AI Voicebots, for instance, can autonomously handle customer service inquiries, reducing the need for human agents and improving operational efficiency). The integration of LLMs into business processes is poised to enhance efficiency, reduce operational costs, and provide more engaging user experiences.

Security Implications of LLMs

With technological advancements come new challenges, particularly in terms of security. One significant concern is the vulnerability to "prompt injection" attacks, where malicious inputs can manipulate LLM behavior. This risk necessitates robust security frameworks to safeguard against unauthorized influence over LLM actions.

You can find more realistic prompt injection examples in this tutorial video.

Prompt injection represents just one facet of potential security threats associated with LLMs. As these models become more integral to business operations and interact more frequently with sensitive data, ensuring their integrity and security becomes paramount. The development of comprehensive security protocols and regular updates to AI models to address emerging threats is crucial.

Regulatory and Ethical Considerations

As LLMs grow in capability and influence, they also attract attention from regulatory bodies interested in ensuring these technologies are used responsibly. Discussions around data privacy, ethical AI use, and transparency in AI operations are intensifying, with implications for how LLMs are deployed and managed.

Organizations like OWASP are actively developing guidelines and best practices to address these concerns, including the formulation of a top 10 list of security risks associated with LLMs. This ongoing work will help shape the standards and expectations for future deployments of LLM technologies. OWASP is currently in the process of curating a top 10 list for it, which could be found here.

The Future of LLMs

Looking ahead, the trajectory of LLM technology points towards more integrated and autonomous systems capable of undertaking a broader range of activities with minimal human input. Innovations such as blockchain integration for secure API transactions and the use of machine learning in threat detection are expected to further enhance the capabilities and security of LLMs.

As LLMs continue to evolve, they will play a crucial role in the digital transformation journeys of many organizations, driving innovation and new capabilities in numerous industries.

Learn More and Explore

We at Pynt, believe that such issues should be discovered where it can be solved- at the developer desk. This approach allows for integration of powerful LLM-based new tools without introducing new weaknesses to the applications.

For those interested in understanding the full potential and implications of Large Language Models, including their integration into your existing systems or how to mitigate associated risks, visit Pynt's related guide. Here, you can explore detailed resources and gain insights into how to effectively implement and secure LLM technologies in your operations.

.svg)

.png)