Developers have never moved faster.

In less than two years, LLMs and MCP (Model Context Protocol) servers have gone from experimental to essential, transforming how software is built, tested, and deployed.

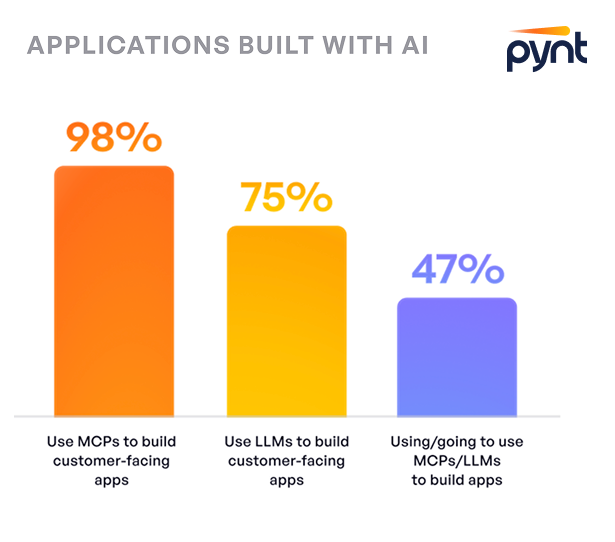

Our new research, The GenAI Application Security Report, based on a survey of 250 engineering and security leaders, reveals the scale of this shift:

98% of organizations are adopting GenAI tools, integrating them deeply into their products and workflows. Only 2% said security risks stopped them from doing so.

The data shows what anyone in engineering can already feel: GenAI isn’t a future trend; it’s the present reality. But hidden beneath this wave of innovation is a growing tension that will define how software is built in the years ahead: freedom versus dependence.

There’s a new control plane in town

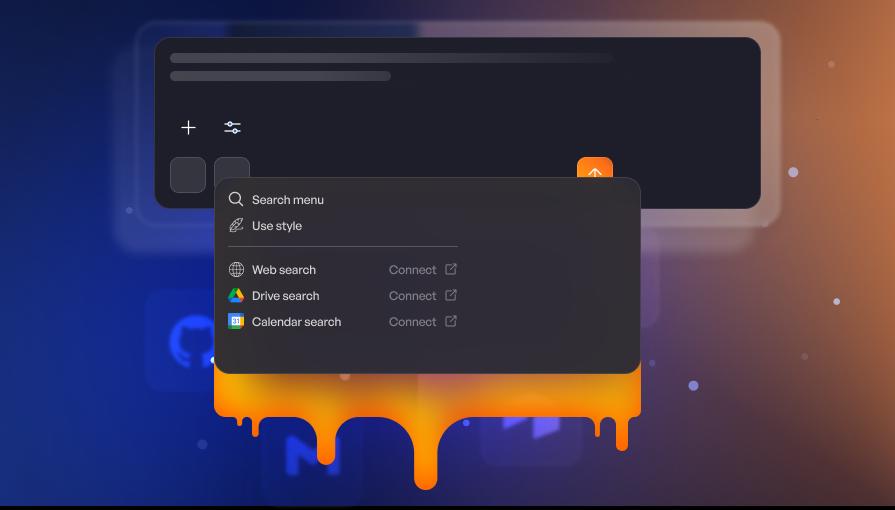

If LLMs are the brains of modern applications, MCPs are the nervous system. They manage context, orchestrate actions, and connect AI models with the APIs and tools they need to perform.

It’s a powerful model. Instead of static applications with fixed logic, we now have adaptive systems: applications that dynamically call APIs, trigger chains of requests, and make real-time decisions based on language prompts and context windows.

In our survey, nearly half of organizations said they already rely on third-party MCP servers, while another third have built their own. That means MCPs are no longer confined to a niche of early adopters. They’re quickly becoming the control plane of modern software, dictating how information flows, what actions are allowed, and how systems interact.

But that control doesn’t necessarily belong to developers anymore.

Developer freedom is quietly declining

Every time an MCP coordinates between an LLM, an API, and a database, a small piece of developer control disappears.

Developers are no longer defining every endpoint or controlling every function. They’re orchestrating behavior across layers of infrastructure that are abstracted away, dynamically managed, and often owned by someone else.

That abstraction comes at a cost. We’re entering an era where developers can move faster, but they understand less about what’s actually happening beneath the surface. A single prompt or agent action can trigger dozens of hidden API calls, each carrying potential data, performance, or security risks.

In other words, the limits of what developers can do will be set less by their imagination, and more by the protocols their applications depend on.
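To make that hidden fan-out concrete, here is a minimal Python sketch of the idea rather than a real integration. It assumes nothing about any specific MCP server or LLM SDK: `CallLog`, `lookup_customer`, `send_reminder`, `handle_prompt`, and the `crm-api`, `billing-api`, and `email-api` targets are all hypothetical names invented for illustration, and no model is actually called. It only shows how one prompt can turn into several downstream calls, and how recording them gives back some visibility.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class CallLog:
    """Collects every downstream call made during one agent action."""
    calls: list = field(default_factory=list)

    def record(self, target: str, operation: str) -> None:
        self.calls.append((target, operation))

    def summary(self) -> Counter:
        # Count calls per target so the fan-out is visible at a glance.
        return Counter(target for target, _ in self.calls)


# Hypothetical tools: each one stands in for real API or database calls.
def lookup_customer(log: CallLog, customer_id: str) -> dict:
    log.record("crm-api", "GET /customers")
    log.record("billing-api", "GET /invoices")
    return {"id": customer_id, "open_invoices": 2}


def send_reminder(log: CallLog, customer: dict) -> int:
    # One outbound message per open invoice.
    for _ in range(customer["open_invoices"]):
        log.record("email-api", "POST /messages")
    return customer["open_invoices"]


def handle_prompt(prompt: str) -> CallLog:
    """One 'agent action': a single prompt that fans out into several calls.

    The prompt is not interpreted here; a real agent would let the model
    decide which tools to invoke.
    """
    log = CallLog()
    customer = lookup_customer(log, "c-42")
    send_reminder(log, customer)
    return log


log = handle_prompt("Remind customer c-42 about unpaid invoices")
print(len(log.calls))
print(log.summary())
```

Even in this toy version, the developer wrote one call to `handle_prompt`, while the systems behind it were touched several times. In a real GenAI workflow the tools are chosen at runtime, so a log like this is often the only place the full chain is visible.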

Security has become the bottleneck

Traditional AppSec tools were built for a simpler world with predictable endpoints, fixed logic, and static inputs. GenAI breaks that model completely.

Every LLM or MCP interaction opens new, dynamic API surfaces.

A single GenAI workflow can involve a dozen internal and external API calls, many of them generated or modified in real time.

Legacy tools like WAFs, DAST, and SAST can’t keep up with that level of fluidity.

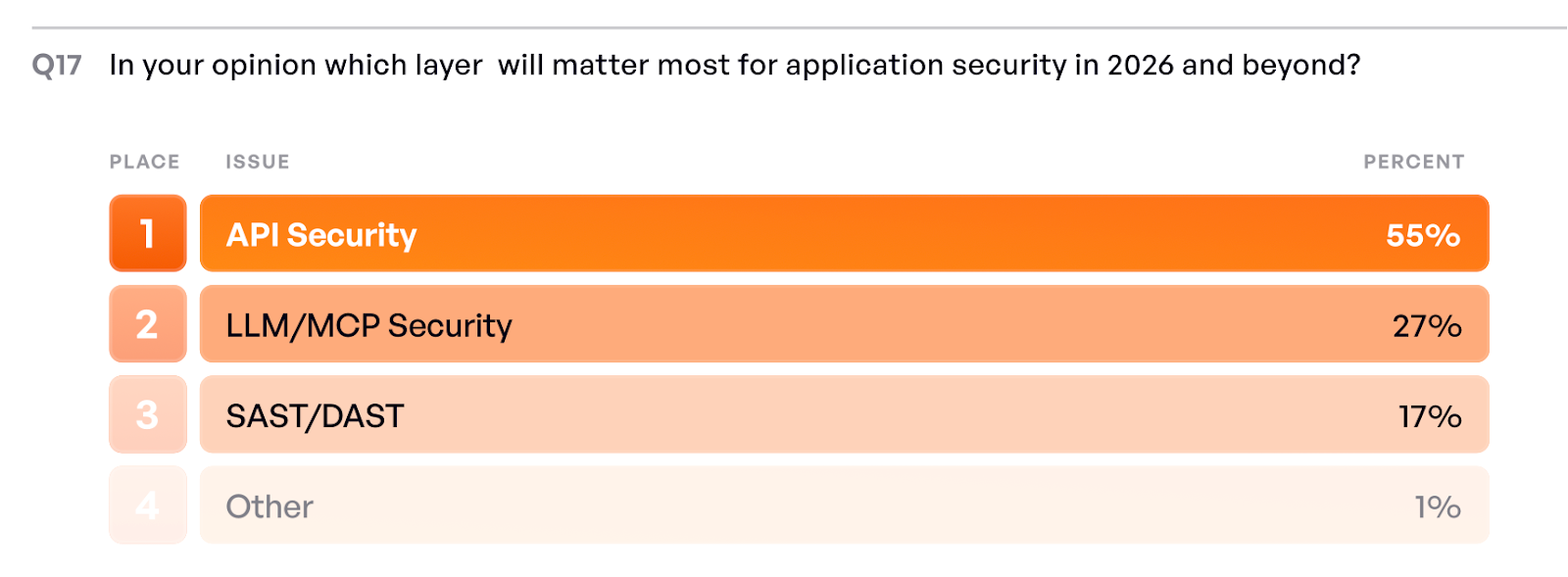

Our survey shows how security leaders are reacting:

Ninety-five percent are already integrating LLMs or MCPs.

Of the small group holding back, 49 percent cite security concerns as the main reason.

Fifty-five percent ranked API security as their top AppSec priority for 2026, higher than any other area.

API security wasn’t a solved problem before GenAI. With AI, it’s exploding.

2026: The year of controlled acceleration

By 2026, enterprises will depend on GenAI. But this new layer will come with new rules. Governance, visibility, and automated testing will become prerequisites for innovation. The companies that adapt fastest won’t be the ones building the most agents. They’ll be the ones who secure the infrastructure those agents rely on. Freedom will return when transparency does.

The developer’s role will be challenged. Perhaps it’s less about writing more code and more about understanding how AI writes and executes code. Without that understanding, every innovation introduces an invisible chain of dependencies no one fully controls.

GenAI is probably here to stay, and it will power the next generation of applications. That makes our mission to restore freedom through visibility: proper discovery and risk detection for every LLM or MCP integrated into our systems.

Download the full report here.
