Remember when security testing meant scanning a few web pages for XSS and SQL injections?

That was the DAST era - simple, predictable, and surface-level.

Then came APIs. Suddenly, everything had an endpoint. Business logic and customer data moved behind thousands of APIs - often undocumented, often duplicated. That’s when API discovery and API security testing became essential. We needed to know what was really exposed and how it behaved under pressure.

Fast-forward to today, and the next wave is already here.

Applications are no longer just running logic; they’re thinking.

LLMs - ChatGPT, Claude, Gemini, and the dozens of private models behind enterprise copilots - have become the new interface between humans and business systems.

The New, Invisible LLM Attack Surface

LLMs are quietly becoming part of every company’s digital fabric.

- A chatbot answering support questions

- A developer assistant connected to internal APIs

- An AI agent summarizing tickets or interacting with production data

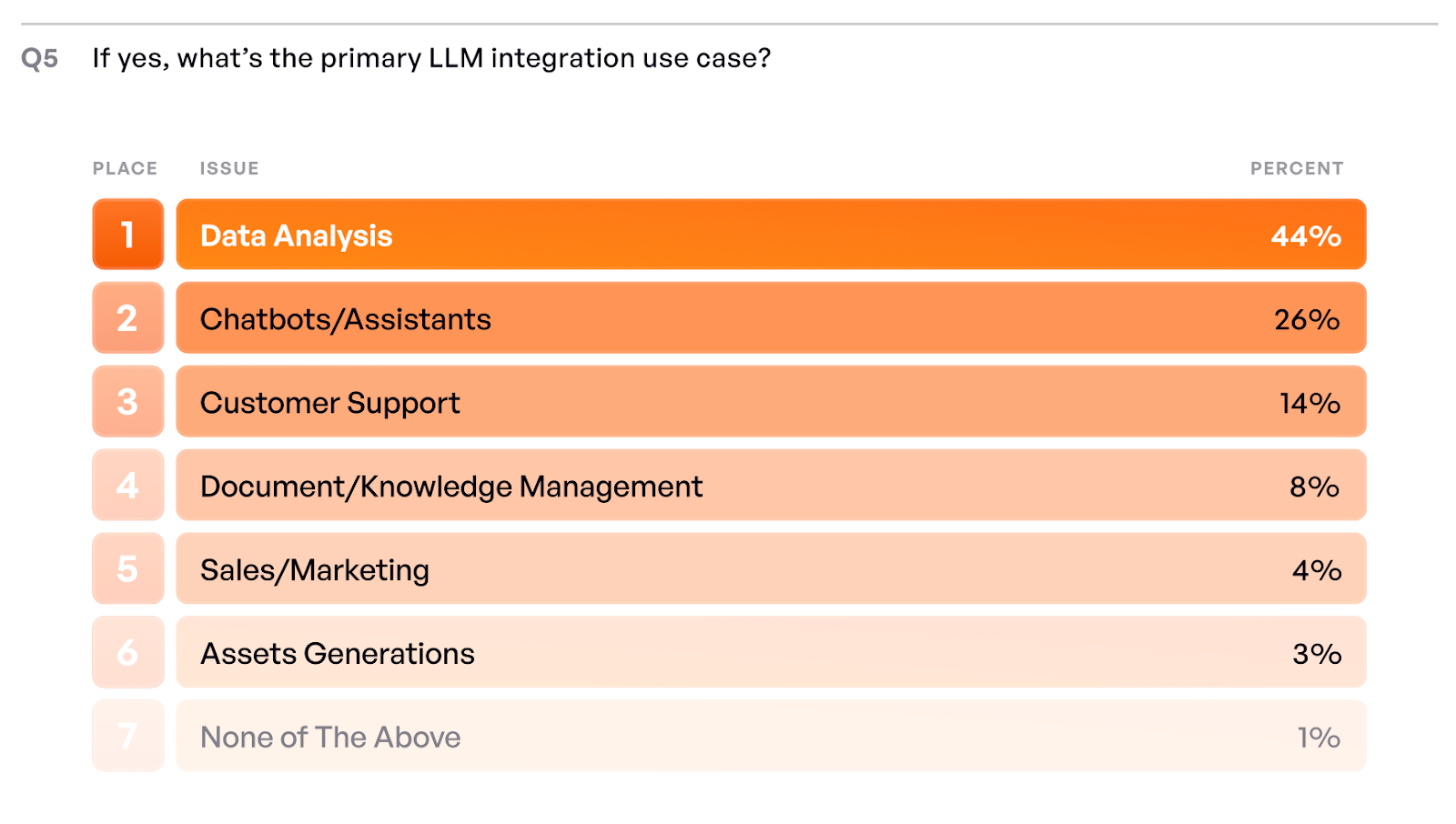

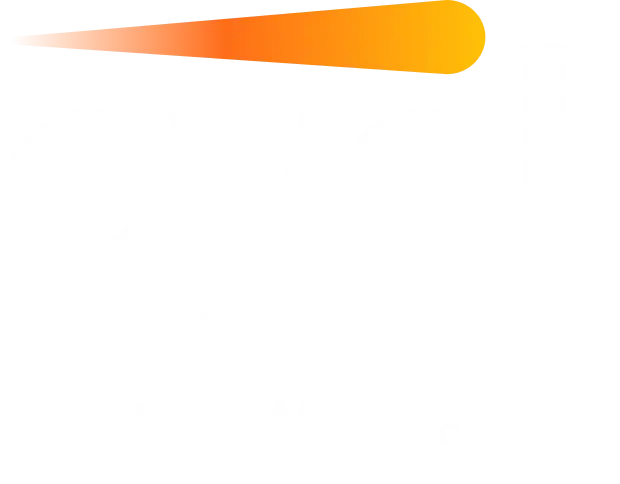

Our most recent research, the GenAI Application Security Report, 2025, shows how fast this is moving. In less than two years, AI moved from optional to foundational for most organizations. 98% percent of respondents have already adopted or are in the process of adopting AI, with only 2% resisting. That shift matters because LLM integration is no longer a competitive advantage; it is the baseline. Organizations that have not adopted AI are not being prudent, they are at risk of falling behind.

But adoption alone is only half the story. 24% percent of organizations are currently onboarding MCP security tools while their MCPs already serve users. In other words, many teams are deploying first and securing later.

The problem? Each of these models is a new interface - a dynamic, self-learning interface that interacts with real systems and real data.

When you integrate an LLM, you’re giving it context, memory, and access - sometimes even the ability to act. That means it can also be influenced, misled, or exploited.

We’ve already seen real incidents: chatbots leaking internal documents, copilots revealing source code, and AI assistants being tricked into executing harmful instructions.

These aren’t futuristic scenarios. They’re today’s reality - and they’re spreading faster than most AppSec teams can track.

Why LLM Discovery Must Come First

Before you can protect anything, you have to see it.

That was true for shadow APIs, and it’s even truer for LLMs.

LLMs show up everywhere: internal copilots, no-code workflows, Slack and Notion integrations, and third-party SaaS that silently embeds model calls. Developers can add model integrations with a few lines of code. A single script in a microservice might send sensitive data to an LLM API - and no one from security will ever know.

That’s why LLM Discovery has to become a new category in security visibility.

It’s about mapping:

- Which models are being used?

- What data do they touch?

- What tools or APIs might they call?

- Who has access and from where?

You can’t test or secure what you can’t see. Discovery is step zero - as always.

From DAST to LLM Testing: The Natural Evolution

DAST was about web applications.

API security testing extended that to structured, machine-to-machine interactions.

Now LLM security testing is extending it again - to semantic, contextual, and reasoning-based systems.

It’s the same story, just a more complex surface.

LLM security testing takes the same principles - probe dynamically, observe responses, detect unsafe behavior - but applies them to a system that thinks in words, not parameters.

That means instead of testing payloads, we test prompts.

Instead of injection attacks, we test instruction overrides.

Instead of SQLi, we look for data leakage through reasoning.

What LLM Security Testing Looks Like

LLM testing isn’t just about sending random prompts. It’s about understanding context.

Here are the key areas to test:

- Prompt Injection

Can an attacker trick the model into revealing secrets, bypassing rules, or changing its behavior? - Data Leakage

Does the model ever reveal sensitive information from its memory, logs, or context windows? - Tool and Plugin Abuse

If the LLM has access to APIs or databases, can it be manipulated into calling them in unintended ways? - Context Injection

In retrieval-augmented setups (RAG), can an attacker poison the context or control what’s being retrieved? - Output Control and Reliability

How deterministic is the model? Does it hallucinate or produce unsafe actions under stress?

These are no longer theoretical vulnerabilities - they’re the new OWASP Top 10 for LLMs.

Why Traditional Security Tools Fail Here

DAST can’t understand a “conversation.” SAST can’t interpret prompts. API scanners expect a fixed schema, while LLMs are probabilistic and contextual. A chatbot’s response may vary between runs. Its vulnerabilities aren’t code injections - they’re reasoning flaws. And these flaws can’t be patched by changing a line of code - they require guardrail tuning, context validation, and runtime observation.

That’s why the same evolution that brought us from DAST → API Testing → Contextual Pentesting must now continue toward AI-Native Security Testing.

LLM Discovery + Security = The Next Layer of Context-Aware Testing

LLM security cannot live in a silo. In practice, models sit on top of APIs. They call them, summarize their responses, and may even generate new API requests. Securing a model therefore means securing the entire chain: input, reasoning, tool call, API, and output.

Context-aware testing unifies API and model testing into a single workflow. It maps the data and logic flows end to end and tests how each link behaves under adversarial conditions. That unified view is how teams move from detection to prevention.

So securing an LLM means securing its entire chain of interactions:

Input → Reasoning → Tool Call → API → Output.

That’s why the future of AppSec is converging around context-aware testing - understanding the real data flow, logic flow, and model flow in one unified view.

Why This Matters Now

Our GenAI research makes this clear: 55% percent of respondents rank API security as their top concern for 2026, surpassing LLM-specific tools and traditional AppSec combined. Because LLMs aren’t replacing APIs; they’re wrapping them. Which means the old risks are still there - just hidden behind layers of natural language. As always, the organizations that take action early will have the advantage.

GenAI builds visibility, automates guardrails, and prevents the inevitable breaches before they hit headlines. Those who wait will discover too late that “the chatbot” wasn’t just a harmless interface - it was a gateway to sensitive systems.

The good news?

We already know how to deal with evolving attack surfaces. Now, we just have to extend the same discipline and context-awareness to the LLM era.

Final Thoughts

The future of security testing isn’t static; it’s adaptive.

Every generation of technology brings a new surface - and each time, the answer is the same: discover, test, secure.

LLM discovery and security testing are not academic ideas. They are the next essential practices in application security. The core principle remains the same: discover, test, secure. The surfaces have changed, but the discipline is familiar.

Because in the AI era, context is the new perimeter.

And if your models are part of the application, they deserve the same level of testing, visibility, and protection as before.

Learn more about how we discover LLM assets and run LLM security testing at Pynt.

.svg)

.png)